ANTHROPOMORPHISM refers to the humanisation of artificial intelligence (AI).

AI developers are working to create humanlike AI technologies. These technologies resemble humans in both physical appearance and behaviour, mimicking human emotions and personality traits.

Anthropomorphism also looks at how we human beings relate to AI technologies in terms of our identity. For example, it looks at how we invoke empathy and trust when we are working or dealing with AI technologies.

Moreover, anthropomorphism refers to the process of assigning humanlike motivations, emotions or characteristics to real or imagined non-human entities. This allows them to engage socially with human beings.

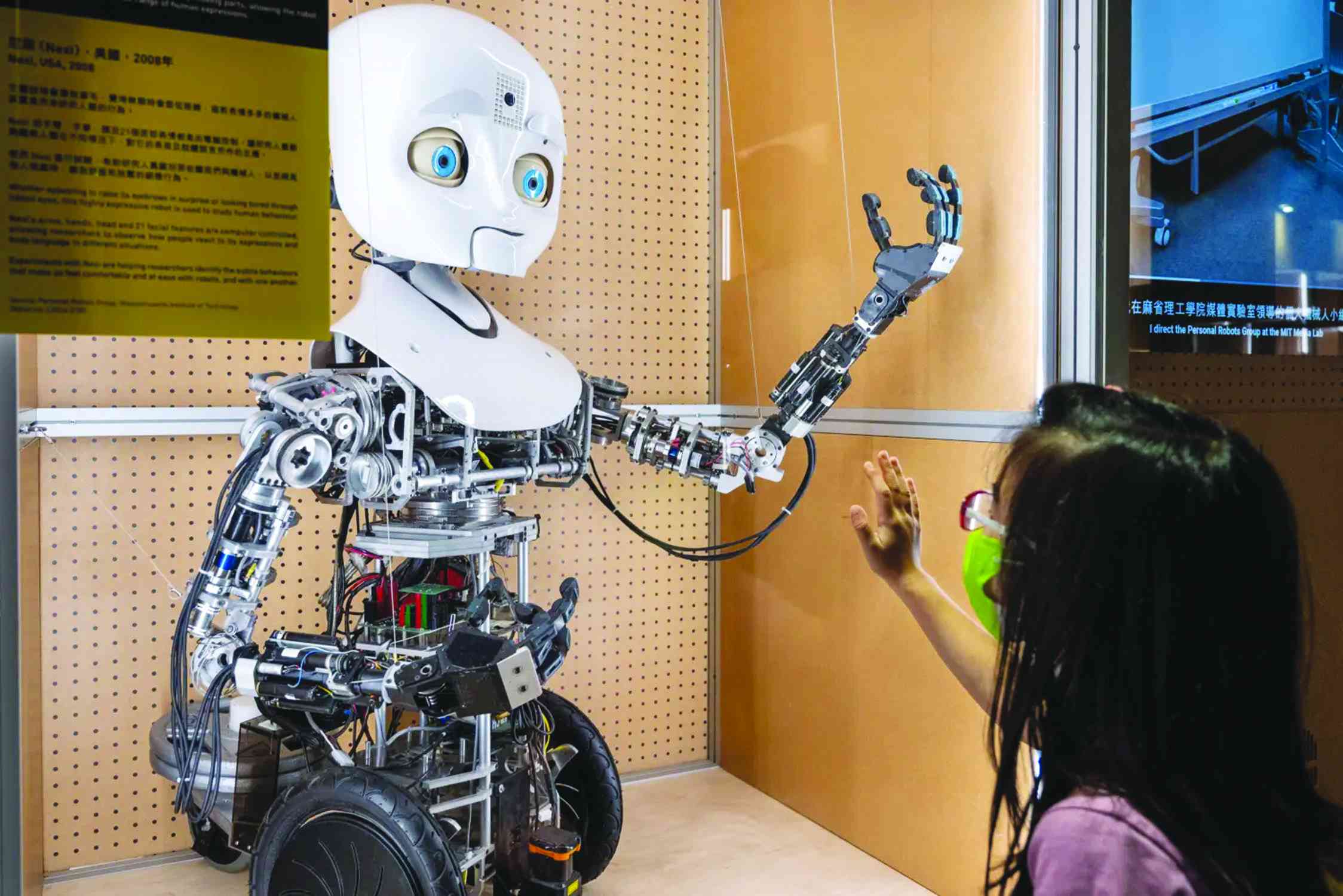

Today, as more and more people use AI technologies, there is a tendency, if not an obsession, to give AI applications agency and make them as realistic as possible. We can see this in robots: if you take a look at today’s robots, you will realise that they are being made to resemble human beings as closely as possible.

This is also being applied in the way AI applications work, particularly in how they operate intelligently, including mechanical thinking and feeling.

As we take a closer look at AI applications, we can see they are also being used in emotional tasks. Chatbots interacting with customers, for example, convey empathy and even send condolence messages during their social interactions.

Moreover, AI is used to anthropomorphise visual and audio functions to bring such products to life.

Good examples of anthropomorphism in AI include human appearance, gendered voices, gendered names, avatar customisation, gendered characters, and interactive personalities.

Prominent AI technologies that have been assigned gendered names in Zimbabwe include Econet’s Yamurai, Steward Bank’s Batsi, and FBC’s Chido, among others. Elsewhere in the world, you have Amazon’s Alexa. Most of these are female names. Then some display human appearances, for example IPsoft’s Amelia, and others have interactive personalities, such as Cleo.

So, what is the problem with anthropomorphism? The first problem is that it can be harmful. Here is what I want you to know, dear readers: today there is a tendency among humans, me included, to humanise these AI technologies or machines. Think of instances when you interact with AI chatbots such as ChatGPT, Claude, DeepSeek, Grok 3 and others.

Have you noticed that you end up using words such as “can you please”, “thank you” or “excellent”? We do this whether consciously or unconsciously.

In my view, politeness towards, or appreciation of, AI applications or machines is completely uncalled for, irrelevant and unnecessary.

How so? If you do not use these words, you will not hurt anyone’s feelings in any way whatsoever. You can just use raw imperatives and still get the same results (try it). However, this is how most people behave. A study we conducted showed that more than 75% of people who use AI applications tend to attribute some possibility of phenomenal consciousness to ChatGPT, Poe, Meta AI, or other chatbots.

Why is that? First, it is inherent in human beings to attribute consciousness to AI systems they interact with frequently. People who use AI applications every day for assistance with writing, coding, or other activities are more likely to attribute some degree of phenomenal consciousness to these systems.

Second, we as human beings tend to attribute agency to AI applications where there isn’t any at all. This comes from daily interaction with AI applications and the way they tend to use human-like language when interacting with us.

Third, in human interaction, communication, whether simple or complex, makes sense only if we assign an agentive context. That is, only when we grant agency to the other party in the conversation.

This comes from the realisation that our language likely evolved as agents started sharing knowledge, thoughts, and feelings. Language is arguably one of the most direct and perceptible reflections of each other’s conscious minds.

Language works when we begin to perceive the other party’s ability to communicate in this way. When we do this, we are more likely to perceive them as agents with complex mental states (a key theme in the philosophy of language and philosophy of mind).

The other problem in anthropomorphising AI technologies is that we are creating a fertile ground for us to become dependent and anxious.

There have been many cases reported worldwide where over-dependency on AI chatbots ended in tragic circumstances. In some cases, involvement with AI chatbots has led to unexpected behaviour and tragic outcomes, with some individuals committing suicide after realising they were in love with machines.

Additionally, anthropomorphism leads to too much trust in AI systems. What is the problem with that? Trusting unpredictable AI technologies or machines is not safe at all, as this can expose us to dangerous outputs, such as manipulation or blatant misinformation. In my previous articles, I have talked about how AI systems sometimes hallucinate, so when people trust them, they end up becoming dependent on lying AI applications. Have you ever noticed that AI applications will always give an answer, even if it is wrong? Have you ever seen them say “I don’t know”?

Another argument is that anthropomorphism in AI is extremely deceptive, if not dangerous. At the same time, it is also completely unavoidable.

Let me take you through a bit of history. Illusionism dates back to ancient times, and it gained great prominence in the early twentieth century, when we started seeing an unprecedented evolution and rise of “robots”.

So, there is no way we can expect human beings to put aside the unrealistic fantasy all of a sudden. One thing that is for sure is that with the rise of any new technology, people immediately want to put it to sexualised use.

Have you asked yourself why most robotic bodies are female? Male robotic bodies, when they are used, are mostly in the form of soldiers or warriors of some sort.

Did you know that when photography was first invented, one of the first and most popular uses was for pornography? So where can AI lead? Your guess is as good as mine, especially with the coming hyper-illusionistic effects.

Maybe we can make it the subject of another article. I am quite sure the results will point to some sort of sexualisation of AI technologies.

One thing I can say about anthropomorphism is that if most of us studied philosophy of mind, I am quite sure we would not be so delusional as to assign consciousness to AI technologies and develop misguided trust. Moreover, anthropomorphism is morally wrong and may infringe on care recipients’ autonomy and human dignity.

The baseline for ethical use of AI is to ensure the obvious distinction between humans and AI. Blurring the lines is a recipe for bad outcomes.

- Sagomba is a chartered marketer, policy researcher, AI governance and policy consultant, ethics of war and peace research consultant. — Email: [email protected]; LinkedIn: @Dr. Evans Sagomba; X: @esagomba.